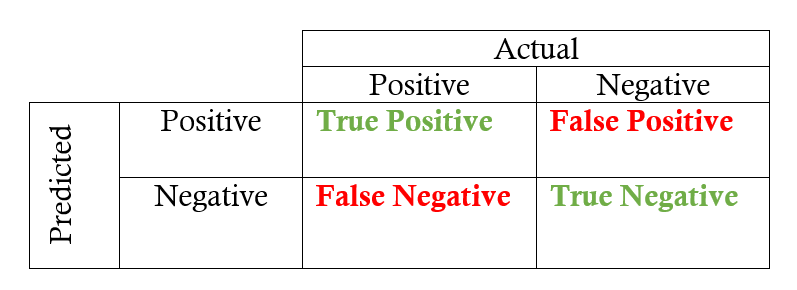

Confusion matrix

The confusion matrix is a 2×2 table that holds the four possible outcomes of a binary classifier: true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). Various measures, such as error rate, accuracy, specificity, sensitivity, precision, and recall, are derived from it.

A data set used for performance evaluation is called a test data set. It should contain the correct (observed) labels, which are compared against the labels predicted by the classifier.

The predicted labels will be exactly the same as the observed labels if the performance of a binary classifier is perfect.

In real-world scenarios, the predicted labels usually match only part of the observed labels.
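As a minimal sketch of how the four cells are filled, the snippet below compares observed and predicted labels pair by pair. The function name `confusion_counts`, the `positive` parameter, and the example labels are illustrative assumptions, not part of the original post.

```python
def confusion_counts(observed, predicted, positive=1):
    """Return (TP, FP, TN, FN) for two equal-length label sequences.

    `positive` is the label treated as the positive class (assumed 1 here).
    """
    if len(observed) != len(predicted):
        raise ValueError("observed and predicted must have the same length")

    tp = fp = tn = fn = 0
    for obs, pred in zip(observed, predicted):
        if pred == positive:
            if obs == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if obs == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return tp, fp, tn, fn


# Made-up test set: 4 actual positives and 6 actual negatives.
observed = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

tp, fp, tn, fn = confusion_counts(observed, predicted)
print(tp, fp, tn, fn)  # 3 1 5 1
```

A perfect classifier would give FP = 0 and FN = 0. Here one positive is missed and one negative is flagged, so the predictions match only part of the observed labels.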

Basic measures derived from the confusion matrix

Error Rate = (FP+FN)/(P+N), where P = TP+FN is the number of actual positives and N = TN+FP is the number of actual negatives

Accuracy = (TP+TN)/(P+N)

Sensitivity (recall or true positive rate) = TP/P

Specificity (true negative rate) = TN/N

Precision (positive predictive value) = TP/(TP+FP)

F-Score (weighted harmonic mean of precision and recall) = (1+b²)(PREC·REC)/(b²·PREC+REC), where b is commonly 0.5, 1, or 2.
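The measures above can be sketched in a few lines of Python. This is only an illustration: the function name `basic_measures`, the helper `_ratio`, and the example counts are assumptions, and the F-score uses the standard F-beta form with a (1 + b²) factor in the numerator.

```python
import math


def _ratio(numerator, denominator):
    """Divide, returning NaN when the denominator is zero (an assumed convention)."""
    return numerator / denominator if denominator else math.nan


def basic_measures(tp, fp, tn, fn, beta=1.0):
    """Compute the basic confusion-matrix measures from the four cell counts."""
    p = tp + fn  # actual positives
    n = tn + fp  # actual negatives

    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, p)
    b2 = beta ** 2

    return {
        "error_rate": _ratio(fp + fn, p + n),
        "accuracy": _ratio(tp + tn, p + n),
        "sensitivity": recall,
        "specificity": _ratio(tn, n),
        "precision": precision,
        "f_score": _ratio((1 + b2) * precision * recall, b2 * precision + recall),
    }


# Made-up counts: TP=3, FP=1, TN=5, FN=1.
measures = basic_measures(tp=3, fp=1, tn=5, fn=1)
for name, value in measures.items():
    print(f"{name}: {value:.3f}")
```

With these counts, accuracy is 8/10 = 0.8 and the error rate is 0.2. Precision and recall are both 3/4, so the F-score with b = 1 is also 0.75.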
